Hello! I’m Inpyo Song, a master’s student researching computer vision and artificial intelligence, advised by Prof. Jangwon Lee. My goal is to develop AI that truly works in the real world. Rather than building models that perform well only in controlled laboratory settings, I focus on creating vision systems that operate reliably in complex, unpredictable real-world environments. Currently, I’m working on object tracking, anomaly detection, human pose estimation, and traffic accident anticipation for autonomous driving. My research centers on one core question: how can we build AI vision models that are robust enough to handle the messy, chaotic nature of the real world? I am also honored to collaborate with Prof. David Crandall of Indiana University Bloomington.

You can find my CV here. I am always open to any form of collaboration. If you have any ideas for potential collaboration, or just feel like having a casual chat, please feel free to reach out!

Actively looking for a PhD position starting in Fall 2026.

🔥 News

- Aug. 2025: 🏆 Received the Best Graduate Research Award at the 2025 Digital Innovation Talent Symposium.

- May 2025: 🎉 Two papers accepted at ICIP 2025.

- Feb. 2025: 🏆 Selected for the I-Corps Korea Program.

- Dec. 2024: 🎉 One paper accepted at WACV 2025.

- Oct. 2024: 🎉 SFTrack presented as a Long Oral Presentation at IROS 2024.

- Oct. 2024: 🏆 Received the Excellence Award at the SKKU Graduate Student Start-up Competition.

- Sep. 2024: 🎉 One paper accepted at CVIU.

- Aug. 2024: 🏆 Received the President’s Award from IITP at the 2024 Digital Innovation Talent Symposium.

- Jun. 2024: Thrilled to join the Indiana University Bloomington CVLab as an intern!

- Jun. 2024: 🎉 One paper accepted at IROS 2024.

📝 Publications (Selected)


Real-Time Traffic Accident Anticipation with Feature Reuse

Inpyo Song, Jangwon Lee

ICIP'25 | [Project page] | [paper] | [arXiv] | [code]

PawPrint: Whose Footprints Are These? Identifying Animal Individuals by Their Footprints

Inpyo Song, Hyemin Hwang, Jangwon Lee

ICIP'25 | [Project page] | [paper] | [arXiv] | [code]

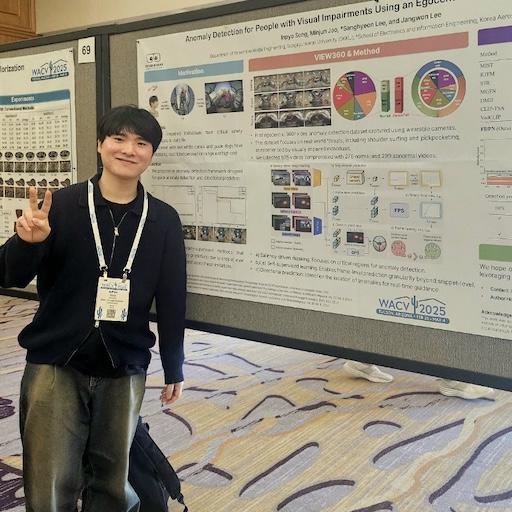

Anomaly Detection for People with Visual Impairments Using an Egocentric 360-Degree Camera

Inpyo Song, Sanghyeon Lee, Minjun Joo, Jangwon Lee

WACV'25 | [Project page] | [paper] | [arXiv] | [code]

(Long Oral) SFTrack: A Robust Scale and Motion Adaptive Algorithm for Tracking Small and Fast Moving Objects

Inpyo Song, Jangwon Lee

IROS'24 | [Project page] | [paper] | [arXiv] | [code]

Action-conditioned Contrastive Learning for 3D Human Pose and Shape Estimation in Videos

Inpyo Song, Moonwook Ryu, Jangwon Lee

CVIU'24 (IF: 4.3) | [paper] | [code]

Motion-aware Heatmap Regression for Human Pose Estimation in Videos

Inpyo Song, Jongmin Lee, Moonwook Ryu, Jangwon Lee

IJCAI'24 (14.0% acceptance rate) | [paper]

Video Question Answering for People with Visual Impairments Using an Egocentric 360-Degree Camera

Inpyo Song, Minjun Joo, Joonhyung Kwon, Jangwon Lee

CVPRW'24 | [arXiv]

🎖 Honors and Awards

- Aug. 2025 Best Graduate Research Award, 2025 Digital Innovation Talent Symposium, Ministry of Science and ICT

- Feb. 2025 Selected for the I-Corps Korea Program (VLM-based Arduino Tutor)

- Oct. 2024 Excellence Award, SKKU Graduate Student Startup Competition (Multimodal VQA-Based Tutoring System for the Digital Transformation of Hands-On Education)

- Aug. 2024 President’s Award from IITP, 2024 Digital Innovation Talent Symposium (Motion-aware Heatmap Regression for Human Pose Estimation in Videos)

- Feb. 2024 Encouragement Award, SKKU Research Matters (Technological Social Responsibility: Anomaly Detection for People with Visual Impairments Using an Egocentric 360-Degree Camera)

- Dec. 2022 Grand Award from SKKU, KAU Start-up Idea Competition (Generating Digital Twin using Instance-NeRF)

💻 Internships

- Jun. 2024 - Aug. 2024, Research Intern @ Indiana University Bloomington CVLab, Bloomington, IN, USA

🤝 Services

- Reviewer, CVPR 2026

- Reviewer, AAAI 2026

- Emergency Reviewer, WACV 2025

- Emergency Reviewer, ICDL 2024